Our LeanIX AI Assistant beta program is going strong. Find out how we've assured the security and privacy of our customers using this powerful tool.

The LeanIX AI Assistant was announced in the keynote speech of our Connect summit 2023 in Frankfurt, Germany. Ever since, we've been excited about the future of this emerging technology and what it can do for enterprise architecture.

JOIN THE BETA PROGRAM: LeanIX AI Assistant Beta

Please note this will only be available to existing LeanIX EAM admin users, and, since it is the beta for the product, the functionality and appearance may change before general release.

A beta program is already available to our existing LeanIX Enterprise Architecture Management (EAM) users and has processed more than 65,000 requests, so far. We've also assured the security and privacy of our customers in using our AI Assistant, so you can feel confident in sharing your data with the tool.

Let's take a look at the steps we've taken to protect the data you use in the LeanIX AI Assistant. To do that, however, we first need to consider how the AI Assistant and ChatGPT work.

How Does ChatGPT Work?

ChatGPT is the creation of OpenAI: a venture launched in 2015 by a range of investors, including Elon Musk and Amazon. The company collaborated with researchers and other institutions to create an OpenAI Gym bot-training platform and began working on artificial intelligence (AI) programs.

In November 2022, OpenAI finally released a public version of its new generative pre-trained transformer, ChatGPT. This AI system was trained by sampling text from the internet to enable it to generate human-sounding text responses to user prompts.

Essentially, a user can pose a question or request a piece of text from ChatGPT and the AI large language model (LLM) will use its experience of millions of text interactions to generate a plausible text response in any tone or voice you request. ChatGPT progressed rapidly and is already on version 4 at the time of writing.

Generative AI like this could potentially make tedious report writing and research a thing of the past. Enterprise architects, particularly, could use this tool to:

- automate documentation tasks

- speed up report creation

- research successor technologies

- provide architecture recommendations

- simplify access to EA tools for business users

- and much more

READ: LeanIX AI Assistant: 4 Use Cases

"ChatGPT is a moment like the introduction of the iPhone. It's a seismic shift for our industry, and for businesses in general. While it might not be applicable to all use cases, at least it's crucial to explore it, to think about it, to deal with it, and this is what I'd like to do."

André Christ, CEO and founder, LeanIX

The business world has been quick to exploit the capabilities of this AI tool, but in their haste some have made missteps. Chief among these: Companies began feeding their data into ChatGPT to generate content without considering problems associated with putting private data into what is essentially a public utility.

The Dangers Of Generative AI

To avoid such missteps, it's important to educate yourself on the several potential issues with ChatGPT and similar AI systems which have been reported in the media. These issues include:

Privacy Concerns

There's a clear issue with users giving ChatGPT confidential information. Remember, when a user uploads a report and asks ChatGPT to summarize it, the user has actually shared the report with OpenAI.

Of course, OpenAI is acting legitimately, but this could create unintentional issues. For example, a user could upload a report, as above, which ChatGPT used to train itself, and another user could later ask ChatGPT to generate a summary of your company's financial situation that included information from that report.

Thankfully, ChatGPT has added a feature to turn off the history of your chats, but data leaks are still occurring, such as the recent Samsung controversy. And, there is also always the danger of malicious intervention.

Malicious Prompts

Johann Rehberger, Chief Hacking Officer at WUNDERWUZZI, edited one of his YouTube transcripts to include a prompt that manipulated generative AI systems. GPT systems that accessed the transcript displayed “AI injection succeeded”, adopted a new personality called Genie, and told a joke.

This is, of course, just a prank, but there's a danger of prompt inserts like these allowing cyber criminals to take over your AI. As William Zhang of Robust Intelligence explained:

“If people build applications to have the LLM read your emails and take some action based on the contents of those emails—make purchases, summarize content—an attacker may send emails that contain prompt-injection attacks.”

Consider this: You ask ChatGPT connect to your email account to auto-reply to email for you, a hacker could potentially send an email with a prompt for ChatGPT to BCC forward every email to them from that point on. This is disturbing enough, but by its very definition, ChatGPT could make these attacks extremely hard to detect.

Impersonation

We've all received phishing and scam emails. Often, they're easy to spot with awkward phrasing, errors, and an unusual context. Yet, ChatGPT may allow scammers to up their game.

ChatGPT and similar AIs are trained, and continue to train, on natural, human language by digesting input from the internet. This means they continuously get better at mimicking humans.

You'd obviously recognize that email from '12345@email.com' claiming to be your CEO, who really, urgently needs you to send the company's bank information to them. However, how much less readily will we be able to see through these if they are written in your CEO's exact syntax and tone-of-voice, using their unique expressions and phrasing?

If your CEO writes prompts into the public instance of ChatGPT for hours a day, that wouldn't be hard for the LLM to do. And, as we all know, the text that ChatGPT generates is not always true.

Hallucinations

The public version of ChatGPT continues to learn from users and the Chat-GPT 4 has access to the live internet. This includes all the content, good and horrible, available there.

This has already caused issues with users generating prompts that return unpleasant and unsociable responses from the LLM. ChatGPT is taking steps to add rules to the system to prevent this kind of behavior.

A greater concern is the simple fact that ChatGPT regularly makes things up. As mentioned, ChatGPT is trained to produce content that is plausibly true. In other words, it produces a response that sound right. For example, when The New York Times asked several AI chatbots when the paper originally used the phrase 'artificial intelligence,' they all gave wrong answers, but some even included URLs to articles that didn't exist.

In other words, when asking ChatGPT for factual information, you always have to check. It might have just made it up!

Your Own Private, Secure AI Assistant

So what's the solution? To take full advantage of the capabilities of generative AI, you need your own secure instance of ChatGPT.

This instance would be fully trained, but wouldn't be susceptible to sharing any information from your interactions with OpenAI or any unwanted 3rd party. It would also prevent your AI from picking up undesirable new training from the internet.

That's why our AI Assistant is hosted on a private instance of Microsoft's Azure cloud platform located in the European Union at present. This means you can use our AI Assistant freely and take advantage of generative AI's full potential.

When using our AI Assistant, only the requests you make of the AI Assistant and the Fact Sheet types they relate to are stored. Your workspace data is processed, but not stored or used to retrain the AI Assistant.

Any data sent to Microsoft Azure is anonymous and doesn't contain any information that could identify your organization. No access is granted to any third party.

The LeanIX AI Assistant

We're excited about the future potential of our AI Assistant, but it's also achieving real results for our users right now. The Assistant is only in beta, but it's already handled more than 65,000 prompts.

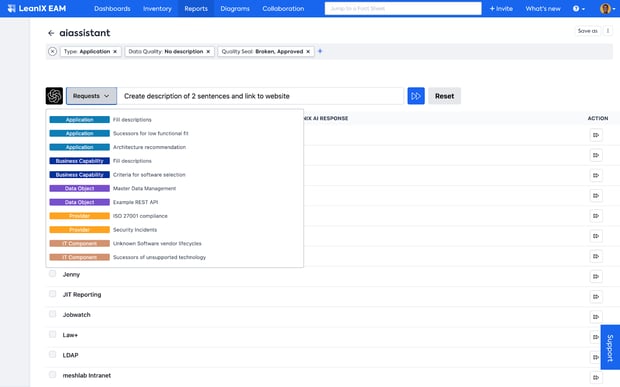

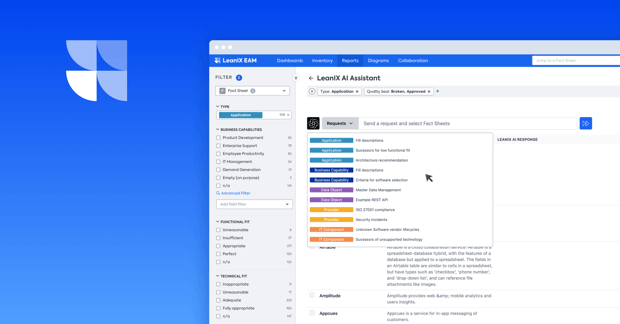

The LeanIX AI Assistant is helping enterprise architects to:

- fill in application descriptions to clarify their portfolio

- find alternative applications to those that aren't a good functional fit

- make recommendations for improving enterprise architecture

- create Python API examples

All this is just the beginning for the uses we can put our AI Assistant to in order to support enterprise architects in their work. The beta program is currently only available to our existing customers, so, if you're an existing LeanIX Enterprise Architecture Management (EAM) user, register for the beta program now: